When it comes to audio production and analysis, understanding audio waveforms is crucial. Audio waveforms are representations of sound in its purest form. They show the amplitude, frequency and phase of sound over time, allowing us to visualize and analyze it in great detail.

At its most basic, an audio waveform is a graph that displays the changes in air pressure over time. The horizontal axis represents time, while the vertical axis represents amplitude. As sound waves pass through the air, they cause variations in air pressure, which are then captured by microphones and translated into electrical signals that can be visualized as waveforms.

A solid understanding of audio waveforms is essential for anyone involved in audio production or analysis. This includes musicians, sound engineers and even speech analysts. By analyzing waveforms, one can determine the quality of a recording, identify any issues that need to be addressed and make adjustments to improve the overall sound quality.

Furthermore, understanding waveforms can help one manipulate and enhance the sound to achieve specific effects. For example, by applying filters and equalization, one can adjust the frequency response of a recording to emphasize particular frequencies and reduce others. This can result in a cleaner, more balanced sound.

In addition, knowing how to analyze waveforms can also help identify specific sounds and noises, such as those caused by interference or distortion. By recognizing these issues in waveforms, one can then take steps to eliminate them, resulting in a cleaner, more professional sound.

Understanding audio waveforms is essential for anyone involved in audio production or analysis. By learning how to read and manipulate waveforms, one can achieve greater control over sound and improve the overall quality of recordings.

When it comes to audio production and analysis, audio waveforms are an essential tool for understanding sound. In this section, we'll dive deeper into audio waveforms, their different types and how they are measured and analyzed.

As mentioned in the previous section, audio waveforms are the graphical representations of sound over time. They show the changes in air pressure that occur as sound waves propagate through space. The waveform's amplitude represents the intensity of the sound, while the frequency represents its pitch.

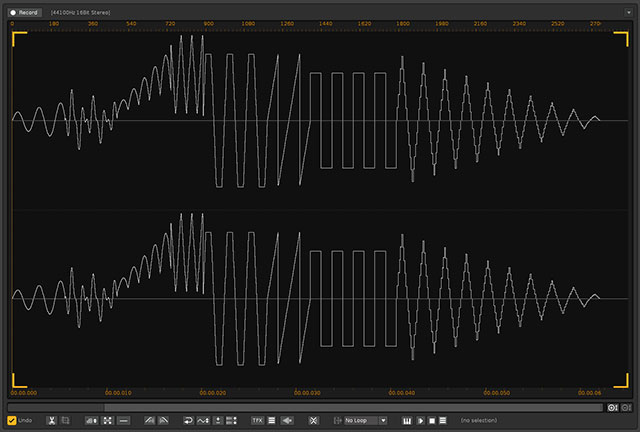

When visualizing an audio waveform, it's common to use a graph with the horizontal axis representing time and the vertical axis representing amplitude. A waveform that continues without interruption represents sustained sound, while flat stretches at or near zero represent silence between sounds.

Different types of audio waveforms are commonly used in audio production and analysis. The sine wave is the simplest and most fundamental type, representing a pure tone at a single frequency. Square, sawtooth and triangle waves are also commonly used in audio synthesis and sound design.
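As a rough illustration, the four classic shapes can be generated with NumPy. This is a minimal sketch under assumptions: the function name `basic_waveform` and the 44.1 kHz default sample rate are illustrative choices, and the square, sawtooth and triangle waves are naive (not band-limited) versions, so they will alias at high frequencies.

```python
import numpy as np

SAMPLE_RATE = 44_100  # samples per second (assumed; a common CD-quality rate)


def basic_waveform(kind: str, freq: float, duration: float,
                   sr: int = SAMPLE_RATE) -> np.ndarray:
    """Return a naive sine, square, sawtooth or triangle wave with peak amplitude 1.0."""
    t = np.arange(int(round(sr * duration))) / sr  # sample times in seconds
    phase = (freq * t) % 1.0  # position within the current cycle, from 0 up to 1
    if kind == "sine":
        return np.sin(2 * np.pi * freq * t)
    if kind == "square":
        return np.where(phase < 0.5, 1.0, -1.0)  # high for half a cycle, low for the rest
    if kind == "sawtooth":
        return 2.0 * phase - 1.0  # ramps from -1 up toward 1, then jumps back
    if kind == "triangle":
        return 1.0 - 4.0 * np.abs(phase - 0.5)  # linear rise and fall between -1 and 1
    raise ValueError(f"unknown waveform kind: {kind}")


# Usage: 10 ms of a 440 Hz triangle wave (441 samples at 44.1 kHz).
tri = basic_waveform("triangle", 440.0, 0.01)
```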

In addition to simple waveforms, complex waveforms can be created by combining multiple sine waves of different frequencies and amplitudes. These complex waveforms are commonly used in music production and sound design to create rich and diverse sounds.
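A minimal sketch of this additive approach, assuming NumPy. The `additive` helper and the particular components in the usage line are illustrative, not taken from any specific instrument.

```python
import numpy as np


def additive(partials, duration: float, sr: int = 44_100) -> np.ndarray:
    """Sum sine components given as (frequency_hz, amplitude) pairs."""
    t = np.arange(int(round(sr * duration))) / sr
    signal = np.zeros_like(t)
    for freq, amp in partials:
        signal += amp * np.sin(2 * np.pi * freq * t)  # add one sine component
    return signal


# A hypothetical tone: a 220 Hz component plus quieter ones at 440 Hz and 660 Hz.
tone = additive([(220.0, 1.0), (440.0, 0.5), (660.0, 0.25)], duration=0.5)
```

The peak of the sum can reach up to the sum of the individual amplitudes (1.75 here), so a combined waveform usually needs to be scaled before playback or export.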

Audio waveforms can be measured and analyzed using various tools and techniques. One standard tool is an oscilloscope, which displays the waveform on a screen in real time. This allows for visualizing the waveform's amplitude, frequency and phase characteristics.

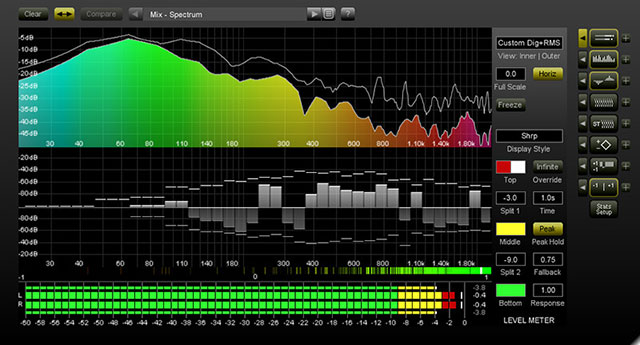

Another tool used in analyzing waveforms is a spectrum analyzer. A spectrum analyzer displays the frequency content of a waveform, allowing for the identification of specific frequencies and the distribution of energy across the frequency spectrum.

Audio waveforms can also be analyzed using digital signal processing techniques like Fourier and wavelet analyses. These techniques involve breaking down the waveform into its component frequencies and analyzing each component separately.

Audio waveforms are essential for understanding and analyzing sound in audio production and analysis. By understanding the different waveforms and how they are measured and analyzed, audio professionals can gain greater control over the sound and improve the overall quality of their recordings.

Audio waveforms have several properties that define their characteristics. Understanding these properties is essential to analyze and manipulate audio signals properly. The following are the most critical properties of audio waveforms:

The amplitude of an audio waveform refers to the strength or intensity of the signal and corresponds to the vertical height of the waveform. It's often expressed in decibels (dB) relative to a reference level; in digital audio that reference is usually full scale, giving dBFS. A higher amplitude indicates a louder sound, while a lower amplitude indicates a quieter sound. Changes in amplitude over time can also help convey the emotional character of a sound, such as anger or excitement.
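Because the decibel scale is logarithmic, converting between linear amplitude and dB is a one-line formula. A minimal sketch using only the standard library; the function names are illustrative, and a reference of 1.0 corresponds to digital full scale (dBFS).

```python
import math


def amplitude_to_db(amplitude: float, reference: float = 1.0) -> float:
    """Linear amplitude to decibels relative to `reference` (dBFS when reference is 1.0)."""
    if amplitude <= 0:
        return float("-inf")  # silence has no finite dB value
    return 20.0 * math.log10(amplitude / reference)


def db_to_amplitude(db: float, reference: float = 1.0) -> float:
    """Decibels back to a linear amplitude (inverse of amplitude_to_db)."""
    return reference * 10.0 ** (db / 20.0)


print(round(amplitude_to_db(0.5), 2))  # -6.02: halving the amplitude is about -6 dB
```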

The frequency of an audio waveform refers to the number of cycles of the waveform that occur per second. It's measured in hertz (Hz) and is the reciprocal of the period, which is the horizontal length of one cycle: the higher the frequency, the shorter each cycle. The frequency determines the pitch of the sound, with higher frequencies corresponding to higher pitches and lower frequencies corresponding to lower pitches.
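Since frequency is the reciprocal of the period, the frequency of a clean periodic tone can be estimated by measuring the time between successive upward zero crossings. This is a simple sketch assuming NumPy and a single clean tone; the name `estimate_frequency` is hypothetical, and practical pitch detectors use more robust methods because noise and strong harmonics can add extra crossings.

```python
import numpy as np


def estimate_frequency(signal: np.ndarray, sr: int) -> float:
    """Estimate a clean tone's frequency (Hz) from its upward zero crossings."""
    # Sample indices where the signal goes from negative to zero or positive.
    idx = np.where((signal[:-1] < 0) & (signal[1:] >= 0))[0]
    if len(idx) < 2:
        raise ValueError("need at least two upward zero crossings")
    # Linear interpolation places each crossing between samples idx and idx + 1.
    frac = -signal[idx] / (signal[idx + 1] - signal[idx])
    crossing_times = idx + frac  # in samples
    period_samples = np.mean(np.diff(crossing_times))  # one cycle, in samples
    return sr / period_samples  # frequency = 1 / period
```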

The phase of an audio waveform refers to the position of the waveform within its cycle relative to a reference point. It's usually measured in degrees (a full cycle is 360°) and indicates the timing of the waveform. Phase can affect a signal's sound quality and spatial perception, especially when dealing with stereo or multichannel audio, where signals that are out of phase can partially or completely cancel each other when combined.
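To make the effect of phase concrete, the sketch below mixes a sine with a phase-shifted copy of itself, similar to what happens when two microphones pick up the same source at different distances. It assumes NumPy; `peak_of_sum` is a hypothetical helper name.

```python
import numpy as np


def peak_of_sum(freq: float, phase_deg: float, sr: int = 48_000) -> float:
    """Peak level when a sine is mixed with a copy of itself offset by `phase_deg` degrees."""
    t = np.arange(sr) / sr  # one second of samples
    original = np.sin(2 * np.pi * freq * t)
    offset = np.sin(2 * np.pi * freq * t + np.deg2rad(phase_deg))
    return float(np.max(np.abs(original + offset)))


print(peak_of_sum(100, 0))    # about 2.0: in phase, the copies reinforce
print(peak_of_sum(100, 90))   # about 1.414 (the square root of 2)
print(peak_of_sum(100, 180))  # near 0: fully out of phase, the copies cancel
```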

The time domain and frequency domain are two different representations of audio waveforms. The time domain represents the waveform as a function of time, showing how the signal's amplitude changes over time. The frequency domain, on the other hand, represents the waveform as a function of frequency, showing the frequency components that make up the signal. Understanding the relationship between the time and frequency domains is essential for analyzing and processing audio signals.
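The discrete Fourier transform moves a sampled signal from the time domain into the frequency domain. A minimal sketch using NumPy's real-input FFT functions (`np.fft.rfft`, `np.fft.rfftfreq`); the helper name and test signal are illustrative, and a Hann window is applied to reduce spectral leakage.

```python
import numpy as np


def dominant_frequency(signal: np.ndarray, sr: int) -> float:
    """Frequency (Hz) of the strongest component in the magnitude spectrum."""
    window = np.hanning(len(signal))                  # taper edges to limit leakage
    spectrum = np.abs(np.fft.rfft(signal * window))   # frequency-domain magnitudes
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sr)  # centre frequency of each bin
    return float(freqs[np.argmax(spectrum)])


sr = 8_000
t = np.arange(sr) / sr  # one second of samples, so bins are 1 Hz apart
x = 0.8 * np.sin(2 * np.pi * 300 * t) + 0.3 * np.sin(2 * np.pi * 1_250 * t)
print(round(dominant_frequency(x, sr), 3))  # 300.0
```

In the time domain `x` looks like a single wiggly line; in the frequency domain it resolves into two peaks, at 300 Hz and 1250 Hz.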

Audio waveforms have various applications, including music, speech analysis and noise reduction. In this section, we'll explore some of the most common applications of audio waveforms.

Audio waveforms are essential in recording and producing music, movies, podcasts and other forms of audio content. During the recording process, a microphone converts sound waves into electrical signals, which are then amplified and recorded onto a digital or analog medium. These recordings can be analyzed using audio software, and the resulting waveforms can be manipulated to enhance the quality of the recording.

In production, audio waveforms are used to adjust the volume and tone of different tracks and to synchronize the audio with the visual elements of the media. By manipulating the waveforms, sound engineers can enhance the clarity of the audio and create a more immersive listening experience.

Audio waveforms are used extensively in the tuning and analysis of musical instruments. For example, when tuning a guitar, a tuner analyzes the sound of each string and measures the frequency of the waveform it produces. The tuner then provides feedback to the musician, indicating whether the string is in tune or needs adjustment.
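Once a tuner has measured a string's frequency, mapping it to the nearest note and a cents offset is simple arithmetic in twelve-tone equal temperament. A minimal sketch, assuming A4 = 440 Hz and MIDI note numbering (A4 = 69); the function name is hypothetical, and the pitch detection step itself is not shown.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz: float, a4_hz: float = 440.0):
    """Nearest equal-tempered note and the offset from it in cents (+ = sharp, - = flat)."""
    midi = 69 + 12 * math.log2(freq_hz / a4_hz)  # MIDI note number; A4 is 69
    nearest = round(midi)
    cents = 100 * (midi - nearest)               # 100 cents per semitone
    octave = nearest // 12 - 1                   # MIDI 60 is C4
    return f"{NOTE_NAMES[nearest % 12]}{octave}", cents


name, cents = nearest_note(445.0)  # "A4", about +19.6 cents (slightly sharp)
```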

Audio waveforms are also used in the analysis of musical performances. By analyzing the waveform of a recorded performance, musicologists and other researchers can gain insight into the nuances of the performance and the techniques used by the musician.

Audio waveforms are critical in speech analysis and recognition. By analyzing the waveform of speech, researchers can study the acoustic properties of different sounds and their interactions with each other. This analysis can help develop speech recognition software and assistive technologies for people with speech impairments.

Speech recognition software analyzes the waveform of the speaker's voice and compares it to a database of known speech patterns. The software then converts the speech into text, allowing users to interact with the computer or other devices using their voice.

Audio waveforms are also used in noise reduction and filtering applications. Noise reduction software analyzes the waveform of the audio signal and identifies unwanted noise that needs to be removed. By applying filters to the waveform, the software can remove unwanted noise and improve the clarity of the audio.

For example, in audio recordings of live concerts, background noise, such as the sound of the audience talking or the hiss of the sound system, can be removed using noise reduction software. In addition, filters can be applied to the waveform to enhance specific frequency ranges, such as the bass or treble, to create a more balanced and enjoyable listening experience.

Overall, audio waveforms are essential to many audio-related applications, including recording and production, musical instrument tuning and analysis, speech analysis and recognition, and noise reduction and filtering. Understanding the properties and characteristics of audio waveforms is essential to making informed decisions when working with audio signals.

Audio waveforms are an essential part of creating and reproducing sound. There are two main types of audio waveforms: analog and digital. Analog waveforms are produced by continuously varying electrical signals, while digital waveforms are created by converting analog signals into digital data that computers can store and manipulate.

Analog waveforms have been used for decades and are still widely used in various audio applications, such as vinyl records and cassette tapes. Analog waveforms are continuous and can take on any value between the minimum and maximum amplitude levels. Many listeners describe the resulting sound as natural and warm, and some musicians and audiophiles prefer it.

Digital waveforms, on the other hand, are created by sampling and quantizing an analog signal, resulting in a series of discrete values that computers can store and process. Digital waveforms are more versatile and can be manipulated and edited with ease. However, quantization rounds each sample to the nearest available level, which discards a small amount of the original signal's information and adds low-level quantization noise; at low bit depths this can make the sound less natural.
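The information lost to quantization can be measured directly. The sketch below, assuming NumPy and samples scaled to the range -1 to 1, rounds a sine to a given bit depth and reports the signal-to-noise ratio; the function names are illustrative. A well-known rule of thumb is roughly 6 dB of SNR per bit, which is why quantization noise at 16 or 24 bits is usually very quiet.

```python
import numpy as np


def quantize(signal: np.ndarray, bits: int) -> np.ndarray:
    """Round samples in [-1, 1] to the nearest of 2**bits evenly spaced levels."""
    step = 2.0 / (2 ** bits)                  # spacing between adjacent levels
    levels = np.round(signal / step) * step   # snap each sample to the nearest level
    return np.clip(levels, -1.0, 1.0 - step)  # keep within the representable range


def snr_db(reference: np.ndarray, approx: np.ndarray) -> float:
    """Signal-to-noise ratio of `approx` relative to `reference`, in dB."""
    noise = reference - approx
    return float(10.0 * np.log10(np.sum(reference ** 2) / np.sum(noise ** 2)))


sr = 44_100
t = np.arange(sr) / sr
tone = 0.9 * np.sin(2 * np.pi * 440.0 * t)
for bits in (4, 8, 16):
    print(f"{bits}-bit: SNR = {snr_db(tone, quantize(tone, bits)):.1f} dB")
```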

Synthesizers are electronic instruments that can generate various types of audio waveforms. These instruments can create complex waveforms that can be manipulated to produce unique and exciting sounds. Synthesizers fall into two broad types: analog and digital.

Analog synthesizers use analog circuitry to generate and manipulate waveforms. They have a warm and organic sound that musicians often prefer. Digital synthesizers, on the other hand, use digital signal processing to create and manipulate waveforms. They offer more versatility and can create a wide range of sounds.

Digital audio workstations (DAWs) are computer software applications for recording, editing and mixing audio. DAWs can record audio from various sources and allow for the manipulation of audio waveforms. They offer a wide range of editing tools, including adding effects, adjusting levels, and cutting and pasting audio sections.

DAWs work with digital audio, but through audio interfaces, which convert between analog and digital signals, they can record from and play back through analog equipment, allowing for seamless integration between different types of gear. They offer high precision and control, making them an essential tool for modern music production and audio engineering.

Overall, understanding how audio waveforms are created and manipulated is essential for anyone involved in music production, sound engineering, or other audio-related fields. With a grasp of their properties and applications, individuals can produce unique, high-quality recordings and productions.

To truly understand audio waveforms, it's essential to be familiar with the various components that make up a waveform. The following are some of its critical elements.

The envelope of an audio waveform describes the overall contour of its amplitude over time and is often modeled with an ADSR (attack, decay, sustain, release) envelope. The attack phase is the initial rise in amplitude when a sound is first produced, while the decay phase is the subsequent fall from that peak. The sustain phase is the level at which the amplitude stabilizes while the note is held, and the release phase is the gradual decrease in amplitude as the sound fades out after the note is released.
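As a rough illustration, a linear ADSR envelope can be built from four segments and multiplied with a tone. A minimal NumPy sketch under assumptions: the segment times, sustain level and 44.1 kHz rate are arbitrary, the `adsr` function is hypothetical, and real synthesizers often use curved (exponential) segments instead of straight lines.

```python
import numpy as np

SR = 44_100  # assumed sample rate


def adsr(attack: float, decay: float, sustain_level: float,
         sustain_time: float, release: float, sr: int = SR) -> np.ndarray:
    """Piecewise-linear ADSR envelope; times in seconds, sustain_level between 0 and 1."""
    def n(seconds: float) -> int:
        return int(round(seconds * sr))  # seconds to a sample count

    a = np.linspace(0.0, 1.0, n(attack), endpoint=False)           # rise to the peak
    d = np.linspace(1.0, sustain_level, n(decay), endpoint=False)  # fall to sustain
    s = np.full(n(sustain_time), sustain_level)                    # hold while the note is held
    r = np.linspace(sustain_level, 0.0, n(release))                # fade after release
    return np.concatenate([a, d, s, r])


env = adsr(attack=0.01, decay=0.1, sustain_level=0.6, sustain_time=0.5, release=0.3)
t = np.arange(len(env)) / SR
note = env * np.sin(2 * np.pi * 220.0 * t)  # a 220 Hz sine shaped by the envelope
```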

Harmonics are frequencies produced alongside the fundamental frequency when a sound is made. In an ideal harmonic sound they are integer multiples of the fundamental, and their relative strengths give a sound much of its characteristic timbre. For example, differences in harmonic content are a large part of what distinguishes a guitar string from a piano string played at the same fundamental frequency.

Transients are brief, high-amplitude bursts of sound that occur at the start of a sound, such as the strike of a drum or the pluck of a string. They are typically associated with a sound's attack phase and give it its initial impact or punch. Transients are essential in music production, as they help to create a sense of rhythm and groove.
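Because transients are sudden jumps in level, a very simple way to locate them is to track short-term loudness and flag large frame-to-frame increases. This is a minimal energy-based sketch assuming NumPy; the function name is hypothetical, the frame size and 10 dB threshold are arbitrary illustrative defaults, and practical onset detectors are typically more sophisticated (for example, tracking spectral changes).

```python
import numpy as np


def detect_onsets(signal: np.ndarray, sr: int, frame: int = 512,
                  threshold_db: float = 10.0) -> np.ndarray:
    """Times (s) of frames whose RMS level jumps by more than threshold_db."""
    n_frames = len(signal) // frame
    frames = signal[: n_frames * frame].reshape(n_frames, frame)
    rms = np.sqrt(np.mean(frames ** 2, axis=1)) + 1e-12  # small offset avoids log(0)
    level_db = 20.0 * np.log10(rms)
    jumps = np.diff(level_db)                  # change from one frame to the next
    onset_frames = np.where(jumps > threshold_db)[0] + 1
    return onset_frames * frame / sr           # frame start times in seconds
```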

The spectral content of a waveform refers to the distribution of frequencies present in the sound. It can be visualized using a frequency spectrum, which shows the amplitude of each frequency component of the sound. The spectral content gives a sound its tonal quality and allows us to distinguish between different instruments or voices. By manipulating the spectral content of a sound, it's possible to shape its timbre or alter its perceived location in space.

Overall, the anatomy of an audio waveform is complex and multi-faceted, with many different components working together to create the final sound. By understanding a waveform's envelope, harmonics, transients and spectral content, we can gain a deeper appreciation for the complexity and richness of the sounds surrounding us.

Audio waveforms can provide valuable insight into the characteristics of a sound signal, and analyzing these waveforms can aid in understanding and manipulating sound in various applications. There are several ways to analyze audio waveforms, including visual analysis using waveform displays, spectral analysis using frequency plots and mathematical analysis using Fourier transforms.

One common way to analyze audio waveforms is visual analysis using waveform displays. A waveform display shows the amplitude of a sound signal over time, providing a visual representation of the waveform. Waveform displays can help gauge a sound's loudness and clarity and identify specific sounds or patterns within the audio. They are commonly used in audio editing software to identify and edit individual parts of a recording.

Spectral analysis involves analyzing the frequency content of a waveform, which can provide insights into the spectral characteristics of a sound. This can be useful in identifying a sound's fundamental frequency and harmonics and recognizing the presence of noise or other frequency components that may need to be removed. Spectral analysis often uses frequency plots, such as spectrograms or frequency response curves.

Spectrograms are graphical representations of the frequency content of an audio waveform over time and can help identify specific frequency components of a sound. Frequency response curves are plots of the amplitude of a sound signal at different frequencies and can be used to analyze the frequency response of audio equipment, such as speakers or headphones.
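A spectrogram is essentially a series of short, windowed Fourier transforms (a short-time Fourier transform). The NumPy sketch below builds one by hand to show the mechanics; the frame and hop sizes are arbitrary illustrative choices, the function name is hypothetical, and libraries such as SciPy offer ready-made routines (for example `scipy.signal.spectrogram`).

```python
import numpy as np


def spectrogram(signal: np.ndarray, sr: int, frame: int = 1024, hop: int = 256):
    """Short-time Fourier transform magnitudes: rows are frequency bins, columns are frames."""
    if len(signal) < frame:
        raise ValueError("signal is shorter than one frame")
    window = np.hanning(frame)
    n_frames = 1 + (len(signal) - frame) // hop
    frames = np.stack([signal[i * hop: i * hop + frame] * window
                       for i in range(n_frames)])
    mags = np.abs(np.fft.rfft(frames, axis=1)).T          # (frame // 2 + 1, n_frames)
    freqs = np.fft.rfftfreq(frame, d=1.0 / sr)            # Hz for each row
    times = (np.arange(n_frames) * hop + frame / 2) / sr  # centre of each frame, in s
    return freqs, times, mags
```

Plotting `mags` (usually converted to dB) with `times` on the horizontal axis and `freqs` on the vertical axis gives the familiar spectrogram image.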

Mathematical analysis of audio waveforms involves using Fourier transforms to break a complex waveform down into its component frequencies. A Fourier transform converts a time-domain waveform into a frequency-domain representation, which helps in analyzing the frequency content of a sound. Fourier transforms are often used in audio processing to remove unwanted noise or frequency components and to analyze the spectral range of a sound.

Overall, analyzing audio waveforms can provide valuable insights into the characteristics of a sound signal and can aid in understanding and manipulating sound in various applications. Visual analysis using waveform displays, spectral analysis using frequency plots and mathematical analysis using Fourier transforms are all standard methods of analyzing audio waveforms.

There are several ways audio waveforms can be manipulated to achieve a desired sound or effect. Here are some commonly used techniques:

Equalization, or "EQ," adjusts the balance of frequencies in an audio waveform. It involves boosting or cutting certain frequency ranges to emphasize or reduce specific elements in the sound. Equalization can make a mix sound more balanced or create specific effects, such as making a voice sound "fuller" or a guitar sound "brighter."

Compression is the process of reducing the dynamic range of an audio waveform. It works by turning down the parts of the signal that rise above a set threshold; make-up gain can then raise the overall level, so the quieter parts end up relatively louder. Compression can be used to even out the levels of a mix and make it sound more consistent, or to add sustain to a guitar or bass sound.
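A compressor's core is its static gain curve: below a threshold the signal passes unchanged, and above it the output level rises by only 1 dB for every `ratio` dB of input. A minimal sketch working in dB; the function name, default threshold and ratio are illustrative assumptions, and a real compressor also smooths its level detection with attack and release times, which is omitted here.

```python
def compressor_gain_db(level_db: float, threshold_db: float = -20.0,
                       ratio: float = 4.0, makeup_db: float = 0.0) -> float:
    """Static hard-knee compressor: gain (dB) to apply to a signal at `level_db`."""
    if level_db <= threshold_db:
        return makeup_db  # below threshold: no gain reduction
    # Above threshold, output rises only 1 dB for every `ratio` dB of input.
    compressed = threshold_db + (level_db - threshold_db) / ratio
    return compressed - level_db + makeup_db


print(compressor_gain_db(-8.0))  # -9.0: 12 dB over the threshold becomes 3 dB over
```

Setting `ratio` to `float("inf")` pins everything above the threshold at the threshold, which approximates a limiter's ceiling.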

Limiting is a more extreme form of compression that prevents an audio waveform from exceeding a certain level. It's often used to avoid clipping, which occurs when a signal is too loud and causes distortion. Limiting can also be used to "maximize" the level of a mix and make it sound louder and more powerful.

Filtering involves removing specific frequency ranges from an audio waveform. This can be done using high-pass, low-pass, or band-pass filters. High-pass filters remove frequencies below a certain cutoff point, while low-pass filters remove frequencies above a certain cutoff point. Band-pass filters allow only a specific range of frequencies to pass through. Filtering can remove unwanted noise or create specific effects, such as a "telephone" or "radio" effect.
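As an illustration only, here is a minimal NumPy sketch using first-order (one-pole) filters, chosen because they are short; production filters and EQs typically use steeper designs such as biquads. The function names are hypothetical, and the 300 to 3400 Hz band for the telephone effect is an assumption based on the traditional telephone voice band.

```python
import numpy as np


def one_pole_lowpass(x, cutoff_hz: float, sr: int) -> np.ndarray:
    """First-order low-pass: attenuates content above cutoff_hz at roughly 6 dB per octave."""
    x = np.asarray(x, dtype=float)
    a = 1.0 - np.exp(-2.0 * np.pi * cutoff_hz / sr)  # smoothing coefficient in (0, 1)
    y = np.empty_like(x)
    state = 0.0
    for n, sample in enumerate(x):
        state += a * (sample - state)  # move a fraction of the way toward the input
        y[n] = state
    return y


def one_pole_highpass(x, cutoff_hz: float, sr: int) -> np.ndarray:
    """Complementary high-pass: the input minus its low-passed version."""
    x = np.asarray(x, dtype=float)
    return x - one_pole_lowpass(x, cutoff_hz, sr)


def telephone_effect(x, sr: int) -> np.ndarray:
    """Crude band-pass over an assumed 300-3400 Hz voice band (high-pass, then low-pass)."""
    return one_pole_lowpass(one_pole_highpass(x, 300.0, sr), 3400.0, sr)
```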

Time-stretching and pitch-shifting allow you to change the tempo and pitch of an audio waveform independently of each other. Time-stretching changes the duration of the audio waveform while keeping its pitch constant. Pitch-shifting adjusts the pitch of the audio waveform while keeping its duration constant. These techniques can be used to create interesting effects, such as a "chipmunk" or "demonic" voice, or to make a musical performance fit better with a specific tempo or key.
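The simplest way to change playback speed, plain resampling, changes duration and pitch together: playing a recording twice as fast halves its length and raises its pitch by an octave. Dedicated time-stretching and pitch-shifting algorithms, such as phase vocoders, exist to separate the two. The minimal NumPy sketch below (the function name is hypothetical) shows only the coupled, naive version.

```python
import numpy as np


def varispeed(signal: np.ndarray, speed: float) -> np.ndarray:
    """Naive resampled playback: speed > 1 shortens the audio AND raises its pitch."""
    n_out = int(len(signal) / speed)
    positions = np.arange(n_out) * speed  # where to read in the original, in samples
    # Linear interpolation between neighbouring samples; positions past the
    # last sample simply reuse the final value.
    return np.interp(positions, np.arange(len(signal)), signal)
```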

These techniques allow audio engineers to manipulate audio waveforms to achieve a wide range of sounds and effects, from subtle corrections to radical transformations.

Audio waveforms are essential to modern music production, speech analysis, noise reduction and filtering. Working with audio waveforms involves various techniques, and best practices should be followed to achieve the best possible results.

Proper gain staging is crucial for getting the best signal-to-noise ratio when working with audio waveforms. Gain staging involves setting the input and output levels of each processing device in the signal chain so that the signal stays well above the noise floor without approaching clipping.

When working with digital audio, it's important to avoid clipping, which occurs when the signal exceeds the maximum digital level. Clipping can result in distortion, which can be difficult or impossible to remove later in the processing chain.

Dynamic range is the difference between an audio waveform's loudest and quietest parts. Understanding dynamic range is essential to achieve a balanced mix and to avoid over-compressing or over-limiting the audio waveform. Compression and limiting are techniques used to control dynamic range, but overuse can result in a loss of dynamics and a flattened sound.
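Dynamic range can be quantified in several ways; one simple indicator is the crest factor, the ratio of peak level to RMS (average) level. It is not the same as the loudest-to-quietest difference, but it falls as compression and limiting squeeze a signal. A minimal NumPy sketch; the function name is illustrative.

```python
import numpy as np


def crest_factor_db(signal: np.ndarray) -> float:
    """Peak-to-RMS ratio in dB; lower values usually mean denser, more compressed audio."""
    peak = np.max(np.abs(signal))
    rms = np.sqrt(np.mean(signal ** 2))
    return float(20.0 * np.log10(peak / rms))


sr = 8_000
t = np.arange(sr) / sr
print(round(crest_factor_db(np.sin(2 * np.pi * 100 * t)), 2))  # 3.01 for a pure sine
```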

Clipping and distortion are undesirable in audio waveforms. Clipping occurs when the waveform exceeds the maximum level, resulting in a flattened peak. Distortion occurs when the waveform is overloaded, resulting in an unnatural and unpleasant sound.
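A minimal NumPy sketch of what clipping does to a waveform, plus one crude way to spot it: counting samples at full scale. It assumes floating-point audio where full scale is 1.0; the function names are hypothetical, and a single full-scale sample does not necessarily mean audible distortion.

```python
import numpy as np


def hard_clip(signal: np.ndarray, ceiling: float = 1.0) -> np.ndarray:
    """Flatten every sample beyond +/- ceiling, as happens when a signal exceeds full scale."""
    return np.clip(signal, -ceiling, ceiling)


def count_clipped(signal: np.ndarray, ceiling: float = 1.0) -> int:
    """Count samples sitting at or beyond the ceiling, a common sign of clipping."""
    return int(np.sum(np.abs(signal) >= ceiling))


sr = 8_000
t = np.arange(sr) / sr
too_hot = 1.5 * np.sin(2 * np.pi * 100 * t)  # peaks 50% above full scale
clipped = hard_clip(too_hot)                 # tops and bottoms are now flat
print(count_clipped(clipped), "of", len(clipped), "samples are pinned at full scale")
```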

To avoid clipping and distortion, it's essential to use proper gain staging and to monitor the waveform for any signs of distortion. Using high-quality equipment and avoiding excessive processing can also help prevent clipping and distortion.

Processing techniques such as equalization, compression, limiting, filtering and time-stretching/pitch-shifting can be used to manipulate audio waveforms to achieve a desired sound. However, it's important to use these techniques judiciously and to understand clearly how they affect the waveform.

For example, excessive equalization can result in a thin or muddy sound, while over-compression can result in a loss of dynamics and a flattened sound. Filtering can remove unwanted noise or frequencies, but it can also remove essential parts of the waveform.

Understanding and working with audio waveforms requires combining technical knowledge and creative skills. By following best practices and using processing techniques judiciously, you can achieve optimal results and create high-quality audio productions.

In summary, audio waveforms are fundamental to audio production and analysis. They are representations of sound that capture the changes in air pressure over time. Understanding the properties of audio waveforms, such as amplitude, frequency, phase and the difference between the time domain and frequency domain, is essential for manipulating and analyzing them effectively.

Audio waveforms are created through analog or digital means, and various tools and techniques, such as synthesizers, digital audio workstations and mathematical analysis, can be used to manipulate and analyze them.

Proper gain staging, understanding dynamic range and avoiding clipping and distortion are all critical best practices for working with audio waveforms. It's also crucial to use processing techniques appropriately to achieve the desired result without compromising the quality of the waveform.

In conclusion, understanding audio waveforms is critical for anyone involved in audio production and analysis. Analyzing and manipulating waveforms effectively allows audio professionals to create high-quality audio that sounds great across various platforms and media.